What better way to ring in the new year than to announce that Louis Preonas, Matt Woerman, and I have posted a new working paper, "Panel Data and Experimental Design"? The online appendix is here (warning - it's math heavy!), and we've got a software package, called pcpanel, available for Stata via ssc, with the R version to follow.*

TL;DR: Existing methods for performing power calculations with panel data only allow for very limited types of serial correlation, and will result in improperly powered experiments in real-world settings. We've got new methods (and software) for choosing sample sizes in panel data settings that properly account for arbitrary within-unit serial correlation, and yield properly powered experiments in simulated and real data.

The basic idea here is that researchers should aim to have appropriately-sized ("Goldilocks") experiments: too many participants, and your study is more expensive than it should be; too few, and you won't be able to statistically distinguish a true treatment effect from a zero effect. It turns out that doing this right gets complicated in panel data settings, where you observe the same individual multiple times over the study. Intuitively, applied econometricians know that we have to cluster our standard errors to handle arbitrary within-unit correlation over time in panel data settings.** This will (in general) make our standard errors larger, and so we need to account for this ex ante, generally by increasing our sample sizes, when we design experiments. The existing methods for choosing sample sizes in panel data experiments only allow for very limited types of serial correlation, and require strong assumptions that are unlikely to be satisfied in most panel data settings. In this paper, we develop new methods for power calculations that accommodate the panel data settings that researchers typically encounter. In particular, we allow for arbitrary within-unit serial correlation, so that researchers can design appropriately powered (read: correctly sized) experiments, even when using data with complex correlation structures.

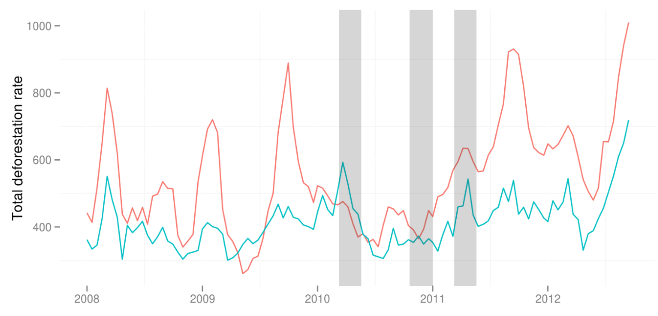

I prefer pretty pictures to words, so let's illustrate that. The existing methods for power calculations in panel data only allow for serial correlation that can be fully described with fixed effects - that is, once you put a unit fixed effect into your model, your errors are no longer serially correlated, like this:

But we often think that real panel data exhibits more complex types of serial correlation - things like this:

Okay, that's a pretty stylized example - but we usually think of panel data as being correlated over time: electricity consumption data, for instance, generally follows some kind of sinusoidal pattern; maize prices in East Africa at a given market typically exhibit correlation over time that can't just be described with a level shift; etc; etc; etc. And of course, in the real world, data are never nice enough that including a unit fixed effect can completely account for the correlation structure.
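If you'd rather see the distinction in numbers than in pictures, here is a minimal Python sketch (not from the paper or from pcpanel; the sample sizes, the AR(1) parameter of 0.7, and the helper name `lag1_corr_after_demeaning` are all illustrative assumptions). It builds one panel where a unit fixed effect is the only source of serial correlation and one where the errors also follow an AR(1) process, strips out each unit's mean, and checks how much lag-1 correlation is left in the residuals.

```python
import numpy as np

rng = np.random.default_rng(0)
J, T, rho = 2000, 10, 0.7   # units, periods, AR(1) parameter (all illustrative)

unit_fe = rng.normal(size=(J, 1))   # time-invariant level shift for each unit

# Case 1: unit fixed effect plus i.i.d. noise.
y_fe_only = unit_fe + rng.normal(size=(J, T))

# Case 2: unit fixed effect plus a stationary AR(1) error with unit variance.
shocks = rng.normal(size=(J, T)) * np.sqrt(1 - rho**2)
ar1 = np.empty((J, T))
ar1[:, 0] = rng.normal(size=J)
for t in range(1, T):
    ar1[:, t] = rho * ar1[:, t - 1] + shocks[:, t]
y_ar1 = unit_fe + ar1

def lag1_corr_after_demeaning(y):
    """Lag-1 correlation of residuals after removing each unit's own mean."""
    resid = y - y.mean(axis=1, keepdims=True)
    return np.corrcoef(resid[:, :-1].ravel(), resid[:, 1:].ravel())[0, 1]

corr_fe_only = lag1_corr_after_demeaning(y_fe_only)
corr_ar1 = lag1_corr_after_demeaning(y_ar1)
print(f"fixed effect only: {corr_fe_only:.2f}   fixed effect + AR(1): {corr_ar1:.2f}")
```

In the first case the leftover correlation is small and slightly negative (demeaning mechanically induces a correlation of about -1/(T-1) in the residuals); in the second it stays clearly positive, which is the part a fixed effect can't soak up.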

So what happens if I use the existing methods when I've got this type of data structure? I can get the answer wildly wrong. In the figure below, we've generated some difference-in-difference type data (with a treatment group that sees treatment turn on halfway through the dataset, and a control group that never experiences treatment) with a simple AR(1) process, calculated what the existing methods imply the minimum detectable effect (MDE) of the experiment should be, and simulated 10,000 "experiments" using this treatment effect size. To do this, we implement a simple difference-in-difference regression model. Because of the way we've designed this setup, if the assumptions of the model are correct, every line on the left panel (which shows realized power, or the fraction of these experiments where we reject the null of no treatment effect) should be at 0.8. Every line on the right panel should be at 0.05 - this shows the realized false rejection rate, or how often we reject the null when we apply a treatment effect size of 0.

Adapted from Figure 2 of Burlig, Preonas, Woerman (2017). The y-axis of the left panel shows the realized power, or fraction of the simulated "experiments" described above that reject the (false) null hypothesis; the y-axis of the right panel shows the realized false rejection rate, or fraction of simulated "experiments" that reject the true null under a zero treatment effect. The x-axis in both plots is the number of pre- and post-treatment periods in the "experiment." The colors show increasing levels of AR(1) correlation. If the Frison and Pocock (FP) model were performing properly, we should expect all of the lines on the left panel to be at 0.80, and all of the lines in the right panel to be at 0.05. Because we're clustering our standard errors in this setup, the right panel is getting things right - but the left panel is wildly off, because the FP model doesn't account for this kind of serial correlation.
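For anyone who wants to poke at this thought experiment themselves, here is a rough Python sketch of the same kind of exercise. It is an assumption-laden stand-in, not the paper's code: the function names are made up, the MDE comes from a naive formula that simply treats errors as uncorrelated over time (in the spirit of, but not copied from, the existing methods), it runs 500 simulations per cell rather than 10,000, and instead of a clustered regression it compares unit-level post-minus-pre changes across arms, which gives the same point estimate in a balanced panel and a standard error that plays the role of clustering by unit.

```python
import numpy as np
from statistics import NormalDist

def ar1_panel(rng, J, T, rho):
    """J units x T periods of stationary AR(1) errors with unit variance."""
    e = np.empty((J, T))
    e[:, 0] = rng.normal(size=J)
    for t in range(1, T):
        e[:, t] = rho * e[:, t - 1] + np.sqrt(1 - rho**2) * rng.normal(size=J)
    return e

def rejection_rate(rng, J, m, r, rho, effect, n_sims=500, alpha=0.05):
    """Share of simulated experiments that reject the null of no effect."""
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    treated = np.arange(J) < J // 2              # half of the units are treated
    rejections = 0
    for _ in range(n_sims):
        y = ar1_panel(rng, J, m + r, rho)
        y[treated, m:] += effect                 # treatment turns on after m periods
        # Unit-level change from the pre-period mean to the post-period mean.
        d = y[:, m:].mean(axis=1) - y[:, :m].mean(axis=1)
        d1, d0 = d[treated], d[~treated]
        est = d1.mean() - d0.mean()              # difference-in-differences estimate
        se = np.sqrt(d1.var(ddof=1) / d1.size + d0.var(ddof=1) / d0.size)
        rejections += abs(est / se) > z_crit
    return rejections / n_sims

def naive_mde(J, m, r, alpha=0.05, power=0.80):
    """MDE if errors are (wrongly) treated as uncorrelated with unit variance."""
    z = NormalDist().inv_cdf(1 - alpha / 2) + NormalDist().inv_cdf(power)
    return z * np.sqrt((1 / m + 1 / r) / (0.5 * 0.5 * J))

rng = np.random.default_rng(0)
J, rho = 200, 0.5
results = {}
for m in (1, 10):                                # a short and a long panel, m = r
    mde = naive_mde(J, m, m)
    power = rejection_rate(rng, J, m, m, rho, effect=mde)
    size = rejection_rate(rng, J, m, m, rho, effect=0.0)
    results[m] = (power, size)
    print(f"m = r = {m}: realized power {power:.2f}, false rejection rate {size:.2f}")
```

Under these assumptions the pattern should echo the figure: the false rejection rate sits near 0.05 in both cases, while realized power overshoots 0.8 in the one-period panel and falls well short of it in the ten-period panel.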

We're clustering our standard errors, so as expected, our false rejection rate is always right at 0.05. But we're overpowered in short panels, and wildly underpowered in longer ones. The easiest way to think about statistical power is this: if you aimed to be powered at 80% (generally the accepted standard), you're going to fail to reject the null - even when there is a true treatment effect - 20% of the time. So that means if you end up powered to, say, 20%, as happens with some of these simulations, you're going to fail to reject the (false) null 80% of the time. Yikes! What's happening here is essentially that by not taking serial correlation into account, in long panels, we think we can detect a smaller effect than we actually can. Because we're clustering our standard errors, though, our false rejection rate is disciplined - so we get stars on our estimates way less often than we were expecting.***
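The arithmetic behind "we think we can detect a smaller effect than we actually can" fits in a few lines. This is a back-of-the-envelope sketch using a normal approximation and a two-sided 5% test (and ignoring the negligible chance of rejecting in the wrong direction); `realized_power` and `se_ratio` are names invented here, where `se_ratio` is the true standard error divided by the one you assumed when sizing the experiment.

```python
from statistics import NormalDist

def realized_power(se_ratio, alpha=0.05, target_power=0.80):
    """Power actually achieved when the true standard error is
    `se_ratio` times the one assumed at the design stage."""
    norm = NormalDist()
    z_alpha = norm.inv_cdf(1 - alpha / 2)
    z_power = norm.inv_cdf(target_power)
    # The design-stage MDE equals (z_alpha + z_power) assumed standard errors,
    # which is only (z_alpha + z_power) / se_ratio true standard errors.
    return norm.cdf((z_alpha + z_power) / se_ratio - z_alpha)

for ratio in (0.75, 1.0, 1.5, 2.0):
    print(f"true SE / assumed SE = {ratio}: power = {realized_power(ratio):.2f}")
```

A ratio of 1 gives back the 80% you planned for; a ratio above 1 (standard errors larger than you budgeted for) drags power down quickly, and a ratio below 1 leaves you overpowered.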

By contrast, when we apply our "serial-correlation-robust" method, which takes the serial correlation into account ex ante, this happens:

Adapted from Figure 2 of Burlig, Preonas, Woerman (2017). Same y- and x-axes as above, but now we're able to design appropriately-powered experiments for all levels of AR(1) correlation and all panel lengths - and we're still clustering our standard errors, so the false rejection rates are still right. Whoo!

That is, we get right on 80% power and 5% false rejection rates, regardless of the panel length and strength of the AR(1) parameter. This is the central result of the paper. Slightly more formally, our method starts with the existing power calculation formula, and extends it by adding three terms that we show are sufficient to characterize the full covariance structure of the data (see Equation (8) in the paper for more details).
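To give a flavor of what "taking the serial correlation into account ex ante" means, here is a minimal Python sketch. To be clear about what it is not: it does not reproduce Equation (8) or the pcpanel implementation. Instead it takes a shortcut that is only available when you already know the full within-unit covariance matrix of the errors, and computes the variance of a balanced difference-in-differences estimate directly from it. The function names, the 200 units, and the AR(1) parameter of 0.5 are illustrative assumptions.

```python
import numpy as np
from statistics import NormalDist

def did_mde(cov, m, r, J, share_treated=0.5, alpha=0.05, power=0.80):
    """MDE for a balanced DiD given the (m + r) x (m + r) within-unit
    error covariance matrix `cov`, assumed to be common to all units."""
    # Weights that turn one unit's errors into its post-minus-pre mean change.
    # They sum to zero, so any unit fixed effect drops out.
    w = np.concatenate([np.full(m, -1.0 / m), np.full(r, 1.0 / r)])
    var_change = w @ cov @ w
    var_did = var_change / (share_treated * (1 - share_treated) * J)
    z = NormalDist().inv_cdf(1 - alpha / 2) + NormalDist().inv_cdf(power)
    return z * np.sqrt(var_did)

def ar1_cov(T, rho, sigma2=1.0):
    """Covariance matrix of a stationary AR(1) process with variance sigma2."""
    lags = np.abs(np.subtract.outer(np.arange(T), np.arange(T)))
    return sigma2 * rho ** lags

J, rho = 200, 0.5
for m in (1, 10):                      # a short and a long panel, m = r
    T = 2 * m
    mde_iid = did_mde(np.eye(T), m, m, J)
    mde_ar1 = did_mde(ar1_cov(T, rho), m, m, J)
    print(f"m = r = {m}: MDE ignoring correlation {mde_iid:.3f}, with AR(1) {mde_ar1:.3f}")
```

With these made-up numbers, the AR(1)-aware MDE is smaller than the naive one in the one-period panel and larger in the ten-period panel, the same direction as the over- and under-powering in the simulations.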

If you're an economist (and, given that you've read this far, you probably are), you've got a healthy (?) level of skepticism. To demonstrate that we haven't just cooked this up by simulating our own data, we do the same thought experiment, but this time, using data from an actual RCT that took place in China (thanks, QJE open data policy!), where we don't know the underlying correlation structure. To be clear, what we're doing here is taking the pre-experimental period from the actual experimental data, and calculating the minimum detectable effect size for this data using the FP model, and again using our model.**** This is just like what we did above, except this time, we don't know the correlation structure. We non-parametrically estimate the parameters that both models need in order to calculate the minimum detectable effect. The idea here is to put ourselves in the shoes of real researchers, who have some pre-existing data, but don't actually know the underlying process that generated their data. So what happens?

The dot-dashed line shows the results when we use the existing methods; the dashed line shows the results when we guess that the correlation structure is AR(1); and the solid navy line shows the results using our method.

Adapted from Figure 3 of Burlig, Preonas, Woerman (2017). The axes are the same as above. The dot-dashed line shows the realized power, over varying panel lengths, of simulated experiments using the Bloom et al (2015) data in conjunction with the Frison and Pocock model. The dashed line instead assumes that the correlation structure in the data is AR(1), and calibrates our model under this assumption. Neither of these two methods performs particularly well - with 10 pre and 10 post periods, the FP model yields experiments that are powered to ~45 percent. While the AR(1) model performs better, it is still far off from the desired 80% power. In contrast, the solid navy line shows our serial-correlation-robust approach, demonstrating that even when we don't know the true correlation structure in the data, our model performs well, and delivers the expected 80% power across the full range of panel lengths.

Again, only our method achieves the desired 80% power across all panel lengths. While an AR(1) assumption gets closer than the existing method, it's still pretty off, highlighting the importance of thinking through the whole covariance structure.

In the remainder of the paper, we A) show that a similar result holds using high-frequency electricity consumption data from the US; B) show that collapsing your data to two periods won't solve all of your problems; C) think through what happens when using ANCOVA (common in economics RCTs) -- there are some efficiency gains, but they're much smaller than you'd think if you ignored the serial correlation; and D) couch these power calculations in a budget constrained setup to think about the trade-offs between more time periods and more units. *wipes brow*

A few last practical considerations that are worth addressing here:

- All of the main results in the paper (and, indeed, in existing work on power calculations) are designed for the case where you know the true parameters governing the data generating process. In the appendix, we prove that (with small correction factors) you can also use estimates of these parameters to get to the right answer.

- People are often worried enough about estimating the one parameter that the old formulas needed, let alone the four that our formula requires. While we don't have a perfect answer for this (more data is always better), simply ignoring these three additional parameters implicitly assumes they're zero, which is likely wrong. The paper and the appendix provide some thoughts on dealing with insufficient data.

- Estimating these parameters can be complicated...so we've provided software that does it for you!

- We'd also like to put in a plug for doing power calculations by simulation when you've got representative data - this makes it much easier to vary your model, assumptions on standard errors, etc, etc, etc.
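On that last point, a simulation-based power calculation can be surprisingly short. The sketch below is one hypothetical way to set it up in Python, not a description of what pcpanel does: take a units-by-periods array of outcomes observed without any treatment, repeatedly re-randomize a placebo treatment, add an assumed effect to the treated units' post-period outcomes, and count how often a test on unit-level post-minus-pre changes rejects. The random-walk data at the bottom is only a stand-in for "representative data", and every function name and parameter value is an assumption.

```python
import numpy as np

def simulated_power(y, m, effect, n_sims=500, t_crit=1.96, seed=0):
    """Realized power from re-randomizing placebo treatment over existing data.

    y is a (units x periods) array of outcomes observed without treatment;
    the first m periods are treated as "pre" and the rest as "post".
    """
    rng = np.random.default_rng(seed)
    J = y.shape[0]
    change = y[:, m:].mean(axis=1) - y[:, :m].mean(axis=1)
    rejections = 0
    for _ in range(n_sims):
        treated = rng.permutation(J) < J // 2     # a random half of the units
        d1 = change[treated] + effect             # impose the assumed effect
        d0 = change[~treated]
        se = np.sqrt(d1.var(ddof=1) / d1.size + d0.var(ddof=1) / d0.size)
        rejections += abs((d1.mean() - d0.mean()) / se) > t_crit
    return rejections / n_sims

def smallest_detectable_effect(y, m, effects, target=0.80):
    """First effect size on the grid whose simulated power reaches the target."""
    for effect in effects:
        if simulated_power(y, m, effect) >= target:
            return effect
    return None

# Stand-in for "representative data": random walks for 200 units over 12 periods.
rng = np.random.default_rng(1)
y = np.cumsum(rng.normal(size=(200, 12)), axis=1)
grid = np.linspace(0.0, 2.0, 21)
mde_hat = smallest_detectable_effect(y, m=6, effects=grid)
print(f"smallest effect on the grid with at least 80% simulated power: {mde_hat}")
```

Reusing the same seed for every effect size means each point on the grid sees the same sequence of placebo randomizations, which keeps simulated power from bouncing around as the effect grows. Swapping in a different estimator or a different standard error assumption only means changing the few lines inside the loop.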

Phew - managed to get through an entire blog post about econometrics without any equations! You're welcome. Overall, we're excited to have this paper out in the world - and looking forward to seeing an increasing number of (well-powered) panel RCTs start to hit the econ literature!

* We've debugged the software quite a bit in house, but there are likely still bugs. Let us know if you find something that isn't working!

** Yes, even in experiments. Though Chris Blattman is (of course) right that you don't need to cluster your standard errors in a unit-level randomization in the cross-section, this is no longer true in the panel. See the appendix for proofs.

*** What's going on with the short panels? Short panels will be overpowered in the AR(1) setup, because a difference-in-differences design is identified off of the comparison between treatment and control in the post-period vs this same difference in the pre-period. In short panels, more serial correlation means that it's actually easier to identify the "jump" at the point of treatment. In longer panels, this is swamped by the fact that each observation is now "worth less". See Equation (9) of the paper for more details.

**** We're not actually saying anything about what the authors should have done - we have no idea what they actually did! They find statistically significant results at the end of the day, suggesting that they did something right with respect to power calculations, but we remain agnostic about this.

Full disclosure: funding for this research was provided by the Berkeley Initiative for Transparency in the Social Sciences, a program of the Center for Effective Global Action (CEGA), with support from the Laura and John Arnold Foundation.